The Reachy Mini Hand Tracker app

Image source: https://youtu.be/JvdBJZ-qR18?si=w0-eoZNezfHA0L3c

In this article, we will dive into the code that enables the Reachy Mini robot to track hand movements by moving its head and centering its gaze on the hand.


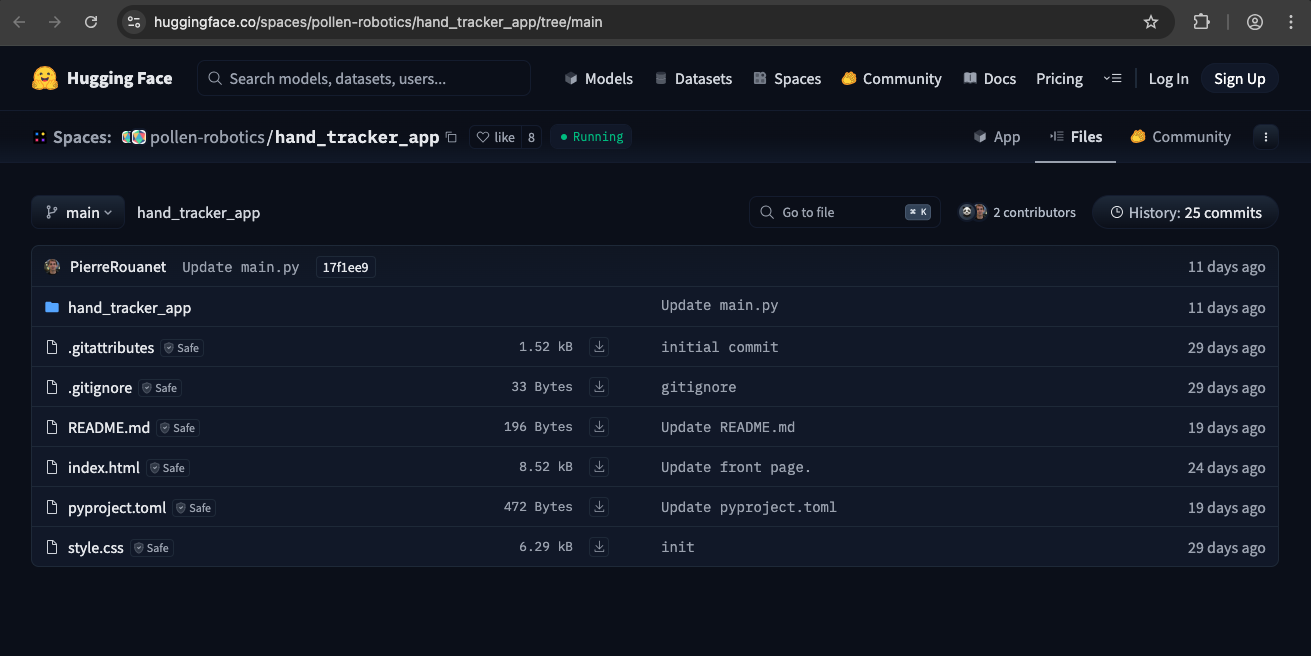

Where is the code?

It is available as a Hugging Face Space at https://huggingface.co/spaces/pollen-robotics/hand_tracker_app/tree/main.

You can also download the code by cloning the repository.

How to run the code?

At the time of publishing this article, there is no SDK or simulator available to run the code.

However, there is still a lot we can learn from it!

Directory structure

    hand_tracker_app/
    ├── index.html
    ├── style.css
    ├── pyproject.toml
    ├── README.md
    └── hand_tracker_app/
        ├── __init__.py
        ├── hand_tracker.py
        └── main.py

index.html and style.css - The web page shown on the Hugging Face Space to introduce the project.

pyproject.toml - The standard Python project configuration file. It defines the build system requirements, project metadata (including dependencies), and tool-specific settings.

README.md - The Space configuration (a YAML block at the top of the file), as specified in https://huggingface.co/docs/hub/en/spaces-config-reference

main.py and hand_tracker.py - Where the magic happens.

main.py

https://huggingface.co/spaces/pollen-robotics/hand_tracker_app/blob/main/hand_tracker_app/main.py

This file serves as the entry point for the application.

The HandTrackerApp class inherits from ReachyMiniApp and contains the main application logic:

1. Check whether a hand is detected in the camera image.
2. If so, calculate how much head movement is needed to bring the hand to the center of the robot's field of view.
3. Apply the head movement.

Below is an excerpt of the code with some additional comments.

    class HandTrackerApp(ReachyMiniApp):
        ..
        def run(self, reachy_mini: ReachyMini, stop_event: threading.Event):
            # Get the camera. `cap` is a "VideoCapture" object.
            cap = find_camera()
            ..
            # Create a HandTracker object from hand_tracker.py
            hand_tracker = HandTracker()

            # Initialize the head pose to the 4x4 identity matrix.
            # Initialize the Euler rotation to 0.
            # Aka some magic maths to make the magic happen.
            head_pose = np.eye(4)
            euler_rot = np.array([0.0, 0.0, 0.0])

            while not stop_event.is_set():
                # Read an image from the camera.
                success, img = cap.read()
                ..
                # Get the hands positions from the image.
                hands = hand_tracker.get_hands_positions(img)

                if hands:
                    # Assuming we only track the first detected hand
                    hand = hands[0]

                    # Draw the hand on the image.
                    # This probably happens when running the app in a debug mode.
                    if gui:
                        draw_hand(img, hand)

                    # Calculate the difference between hand location and the center of vision.
                    error = np.array([0, 0]) - hand
                    error = np.clip(
                        error, -self.max_delta, self.max_delta
                    )  # Limit error to avoid extreme movements

                    # Update the Euler rotation.
                    euler_rot += np.array(
                        [0.0, -self.kp * 0.1 * error[1], self.kp * error[0]]
                    )

                    # Calculate the required head pose.
                    head_pose[:3, :3] = R.from_euler(
                        "xyz", euler_rot, degrees=False
                    ).as_matrix()
                    head_pose[:3, 3][2] = (
                        error[1] * self.kz
                    )  # Adjust height based on vertical error

                    # Apply the calculated head pose.
                    reachy_mini.set_target(head=head_pose)
                ..
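
To make the "magic maths" a little less magic, here is a minimal, standard-library-only sketch of what one loop iteration computes. It is not the app's code: the function names `control_step` and `pose_matrix` are made up for illustration, and the gain and limit values are hypothetical, since the excerpt does not show the real values of `self.kp`, `self.kz`, or `self.max_delta`. The rotation matrix is written out by hand to match what SciPy's `R.from_euler("xyz", ...)` produces (rotations about the fixed x, then y, then z axes).

```python
import math

# Hypothetical gains and limit: the excerpt does not show the real values
# of self.kp, self.kz, or self.max_delta.
KP = 0.2
KZ = 0.04
MAX_DELTA = 0.3


def clip(value, low, high):
    """Clamp a scalar to the closed interval [low, high]."""
    return max(low, min(high, value))


def control_step(hand, euler_rot, kp=KP, kz=KZ, max_delta=MAX_DELTA):
    """One tracking update, mirroring the arithmetic in the excerpt.

    hand      -- (x, y) of the hand, with (0, 0) at the image center
    euler_rot -- accumulated [roll, pitch, yaw] in radians
    Returns the new Euler angles and the head height offset.
    """
    # Error is the offset from the hand to the image center, clipped
    # so a hand near the image edge cannot cause a large jump.
    err_x = clip(0.0 - hand[0], -max_delta, max_delta)
    err_y = clip(0.0 - hand[1], -max_delta, max_delta)

    roll, pitch, yaw = euler_rot
    pitch += -kp * 0.1 * err_y  # vertical error nudges pitch (damped by 0.1)
    yaw += kp * err_x           # horizontal error nudges yaw
    z_offset = err_y * kz       # height follows the current vertical error
    return [roll, pitch, yaw], z_offset


def pose_matrix(euler_rot, z_offset):
    """Build a 4x4 homogeneous pose as nested lists.

    The top-left 3x3 block is the rotation R = Rz @ Ry @ Rx and the last
    column holds the translation (only the z component is used here).
    """
    a, b, c = euler_rot
    ca, sa = math.cos(a), math.sin(a)
    cb, sb = math.cos(b), math.sin(b)
    cc, sc = math.cos(c), math.sin(c)
    return [
        [cc * cb, cc * sb * sa - sc * ca, cc * sb * ca + sc * sa, 0.0],
        [sc * cb, sc * sb * sa + cc * ca, sc * sb * ca - cc * sa, 0.0],
        [-sb, cb * sa, cb * ca, z_offset],
        [0.0, 0.0, 0.0, 1.0],
    ]


if __name__ == "__main__":
    # A hand to the right of center and slightly above it.
    angles, z = control_step((0.5, -0.1), [0.0, 0.0, 0.0])
    for row in pose_matrix(angles, z):
        print(row)
```

Note that the angles accumulate from one frame to the next, so the head keeps turning while any error remains, whereas the height offset is recomputed from the current error on every frame. The clipping step limits how far a single frame can move the head.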

hand_tracker.py

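The HandTracker class receives a single image (a frame from the camera feed, passed in from main.py) and uses Google's MediaPipe library to detect hands in it.

For each detected hand, it calculates the center of the palm and returns these positions. Judging by how main.py subtracts the position from [0, 0], the coordinates appear to be expressed relative to the center of the image.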
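
As an illustration of what "center of the palm" could mean in practice, here is a small sketch that averages a few of MediaPipe's 21 hand landmarks. This is an assumption, not the app's implementation: the function name `palm_center`, the choice of landmarks (the wrist plus the four finger-base joints), and the mapping to a [-1, 1] range are all hypothetical. What is taken from MediaPipe itself is only that landmark coordinates are normalized to [0, 1] and that indices 0, 5, 9, 13, and 17 are the wrist and the index, middle, ring, and pinky base joints.

```python
# Indices of the wrist and the four finger-base (MCP) joints in
# MediaPipe's 21-point hand landmark model.
PALM_LANDMARKS = (0, 5, 9, 13, 17)


def palm_center(landmarks):
    """Estimate the palm center from 21 (x, y) landmarks.

    landmarks -- sequence of (x, y) pairs normalized to [0, 1], with the
                 origin at the top-left corner of the image
    Returns (x, y) in [-1, 1] with (0, 0) at the image center.
    This coordinate convention is an assumption made for this sketch.
    """
    xs = [landmarks[i][0] for i in PALM_LANDMARKS]
    ys = [landmarks[i][1] for i in PALM_LANDMARKS]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Shift and scale so the image center maps to (0, 0).
    return (2.0 * mean_x - 1.0, 2.0 * mean_y - 1.0)


if __name__ == "__main__":
    # A fake hand whose landmarks all sit in the upper-right quadrant.
    fake_hand = [(0.75, 0.25)] * 21
    print(palm_center(fake_hand))
```

Averaging only the palm joints, rather than all 21 points, keeps the estimate steady when the fingers open and close.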

Reflections

It is impressive how much a small amount of code can accomplish, thanks to the functionality provided by the project dependencies.

With the Hugging Face/Pollen Robotics platform, configuring these dependencies is straightforward. In this app, it is simply a matter of listing mediapipe and reachy_mini as dependencies in the pyproject.toml file and importing them in the Python files.

The possibilities on the Reachy Mini platform are truly exciting! Can’t wait to get it!!

That’s all for now! Do you have specific questions? Ask in the comments below. 🤖✨